Radioactive rocks were front and center during the late 1940s and early 1950s, as Colorado, Utah, and New Mexico hosted what was called the “Great Uranium Rush,” the last mineral rush in which individual prospectors had a chance to strike it rich. The quest was for, in the parlance of that era, “hot rocks”: rocks emitting elevated levels of radioactivity that might indicate a uranium deposit worth millions of dollars.

A few prospectors did indeed make their fortunes. Still, most received their reward by participating in an adventure that thrilled the nation and introduced words and terms like “radioactivity,” “Geiger counter,” and “radiometric prospecting” into the general vocabulary.

Although finding a million-dollar uranium deposit today is unlikely, understanding radioactivity and knowing how to detect it can greatly enhance the mineral-collecting experience. Radioactivity is one of the fascinating physical properties of minerals. It is ionizing energy in the form of particles and rays produced by the spontaneous disintegration or “decay” of unstable atomic nuclei.

Understanding & Identifying Radioactivity

While this definition might seem a bit intimidating, getting a practical handle on radioactivity is not that difficult. Admittedly, the word is loaded with negative connotations linked to nuclear weapons, fallout, toxic waste disposal, reactor meltdowns, and the hazards of radon gas. Nevertheless, radioactivity is very much a part of the natural world, especially the world of mineralogy.


Minerals are described as radioactive when they emit energy in the form of alpha, beta, or gamma radiation. “Radiation” is the catchall term for energy in the form of waves or particles. Gamma rays make up the extreme high-frequency, short-wavelength end of the electromagnetic spectrum, the broad band of radiant energy that also includes radio waves, microwaves, infrared, visible light, ultraviolet, and X-rays.

Alpha and beta particles are not forms of electromagnetic energy. Alpha radiation consists of positively charged, high-energy particles made up of two protons and two neutrons (identical to the nuclei of helium atoms). Beta particles can be negative or positive; negatively charged beta particles are high-speed electrons, while positively charged beta particles are positrons (the “antimatter” counterparts of electrons).

Exploring Ionization

Alpha particles, beta particles, and gamma rays (along with X-rays) are classified as “ionizing” radiation, meaning that they have sufficient energy to ionize atoms in the materials they strike. Atoms become ionized when they lose electrons and assume a net positive charge. Because it disrupts normal biochemical functions on the molecular and atomic levels, ionizing radiation can be harmful to living tissue. Ionizing radiation is produced by nuclear fusion, nuclear fission, and atomic decay, the latter being the natural disintegration of the nuclei of unstable, heavy elements or isotopes (forms of an element with different numbers of neutrons).

Ionizing radiation can be cosmic, man-made, or geophysical in origin. The sun, a giant nuclear fusion furnace that emits intense gamma radiation, provides most of our cosmic radiation. Fortunately, very little reaches the Earth’s surface because of its distance from the sun and atmospheric absorption. During the past 80 years, the Earth’s cumulative environmental radiation load has increased significantly due to uranium mining and processing, nuclear weapons manufacture and testing, nuclear power and X-ray generation, accidental radiation releases, production of radioactive isotopes for medical and industrial uses, and radioactive waste disposal.

Geophysical Radiation

Mineral collectors, rockhounds, and prospectors are most interested in geophysical radiation, which is emitted by natural radioactive elements that are present in minerals as essential or accessory components. Most geophysical radiation is produced by uranium and thorium, which occur in trace amounts in many igneous rocks, especially granite. The effects of geophysical radiation go far beyond surface radioactivity. An estimated 80 percent of the Earth’s internal heat is produced by the atomic disintegration of uranium, thorium, and the elements and isotopes in their atomic-decay chains.

Of the 92 naturally occurring elements, 11 are radioactive. Of these, only uranium and thorium are relatively abundant. Uranium was identified as an element in 1789; it was isolated in 1841 as a very dense, silvery-white metal that oxidizes rapidly in air. Ranking 51st in crustal abundance, uranium is about as common as tin.

Thorium, discovered in 1828, is similar in appearance to uranium but is half as dense and much more common. Until the discovery of radioactivity, uranium and thorium were little more than laboratory curiosities. Small quantities of uranium oxides were used to color glass yellow, while thorium compounds that incandesce (emit visible light) when heated were employed in gas-lantern mantles.

Driven by Discoveries


The discovery of radioactivity followed investigations into the mysterious, penetrating “invisible energy” that was produced by passing an electrical current through vacuum-discharge tubes. In 1895, the German physicist Wilhelm Conrad Röntgen (1845-1923) named this energy “X-rays” to signify its unknown nature. Radioactivity was accidentally discovered in 1896 when French physicist Antoine-Henri Becquerel (1852-1908) studied the effects of X-rays and sunlight on potassium uranyl sulfate, a compound that fluoresced in direct sunlight. Becquerel placed this compound atop photographic plates wrapped in lightproof black paper, then exposed it to sunlight.

He noted that the photographic plates became exposed and attributed this to some type of penetrating energy related to fluorescence.

When cloudy weather delayed his experiments, Becquerel stored both the uranium compound and the unexposed, wrapped photographic plates together inside a dark desk drawer. Later, out of curiosity, he developed the plates and found they had already been exposed. This exposure meant that the uranium compound, without any induced fluorescence, continuously emitted invisible, penetrating rays. Becquerel then demonstrated that uranium itself, not its compounds, was continuously emitting these rays, which became known as “uranium rays” or “Becquerel rays.”

Marie Curie & Ernest Rutherford

Among the first to investigate these rays was Marie Curie (1867-1934), the Polish-born French chemist and physicist who coined the term “radioactivity.” In 1898, after extracting uranium and thorium from uraninite (uranium oxide), Curie was surprised to find that the uraninite was still highly radioactive. Concluding that it must contain additional sources of radioactivity, she extracted two previously undiscovered radioactive elements—polonium and radium. The radium was particularly interesting because of its extraordinarily intense radioactivity.

In 1902, British physicist Ernest Rutherford (1871-1937) proposed that radioactivity consists of what we now know as alpha and beta particles, and gamma rays. He found that alpha and beta particles lose their energy relatively quickly as they pass through materials, while gamma rays have a far greater penetrating power. Until the discovery of radioactivity, most scientists believed that the smallest particle of matter was the atom, which was indivisible and unchangeable. But Rutherford challenged the idea of atomic indivisibility by proposing that alpha and beta particles were subatomic components of disintegrating atoms. This concept opened the door to modern particle physics and an entirely new understanding of the nature of matter and energy.

Early 20th Century Proves Progress

The early 1900s saw many exciting discoveries about radioactivity. While working with thorium, Rutherford had detected radioactivity throughout his laboratory—even after the thorium had been removed. He deduced that this radioactivity came not from the thorium itself, but from a gaseous product of thorium’s atomic disintegration. This realization led to the discovery of another radioactive element—radon.

Rutherford then postulated that radioactive elements spontaneously and continuously disintegrate to release radiation and produce a decay chain of other radioactive elements and isotopes. He also learned that radon’s intense radioactivity decreased by half every few days. His term “half-life” is now used to describe the speed at which unstable atoms undergo atomic disintegration.
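The idea of a half-life can be sketched in a few lines of Python. This is only an illustration: the function name `remaining_fraction` is made up for this example, and the 4-day half-life is an assumed round number standing in for "every few days," not a measured value.

```python
def remaining_fraction(elapsed, half_life):
    """Fraction of the original unstable atoms left after `elapsed` time.

    Both arguments must use the same time unit (days, years, and so on).
    """
    if half_life <= 0:
        raise ValueError("half_life must be positive")
    # Each half-life that passes cuts the remaining amount in half.
    return 0.5 ** (elapsed / half_life)


# Assumed 4-day half-life, chosen only to illustrate "every few days".
for days in (0, 4, 8, 12):
    print(days, "days:", remaining_fraction(days, 4.0))
```

After one half-life, half of the atoms remain; after two, a quarter; after three, an eighth. The decline never quite reaches zero, which is why long-lived elements such as uranium are still present in rocks today.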

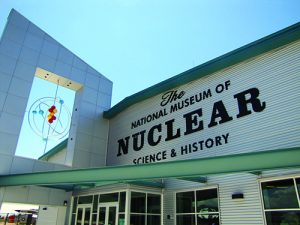

Albuquerque, New Mexico, has many interesting exhibits about the history of radioactivity.

Rutherford observed that an inverse relationship existed between half-life and the intensity of radioactivity. Uranium, with its low level of radioactivity, has a very long half-life of more than four billion years. But extremely radioactive elements such as radium and radon have very short half-lives.

Unfortunately, the effects of ionizing radiation on living tissue were not yet understood. While exposure to radioactivity seemed to halt the growth of certain cancers, it also caused burns and open lesions on the skin of many researchers. Nevertheless, hopes that radiation would cure cancer and boost general well-being created a huge demand for radium, some for research purposes, but mostly to be used in patent medicines and bizarre therapeutic devices.
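The inverse relationship between half-life and intensity that Rutherford observed can be made concrete with a rough calculation of decays per second in one gram of a pure isotope. The sketch below is an illustration only. The function name is hypothetical, and the half-life and molar-mass figures for uranium-238 and radium-226 are standard reference values supplied here for the example.

```python
import math

AVOGADRO = 6.02214076e23               # atoms per mole
SECONDS_PER_YEAR = 365.25 * 24 * 3600  # Julian year


def specific_activity_bq_per_gram(half_life_years, molar_mass_g):
    """Decays per second (becquerels) in one gram of a pure isotope."""
    # Decay constant: the probability per second that a given atom decays.
    decay_constant = math.log(2) / (half_life_years * SECONDS_PER_YEAR)
    atoms_per_gram = AVOGADRO / molar_mass_g
    return decay_constant * atoms_per_gram


# Reference values assumed for this example.
u238 = specific_activity_bq_per_gram(4.468e9, 238.05)
ra226 = specific_activity_bq_per_gram(1600.0, 226.03)

print(f"Uranium-238: {u238:.3g} Bq per gram")
print(f"Radium-226:  {ra226:.3g} Bq per gram")
print(f"Radium is roughly {ra226 / u238:.2g} times more active per gram")
```

Because the half-life sits in the denominator, an isotope with a half-life millions of times shorter is millions of times more active gram for gram, which is why tiny traces of radium were worth mining.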

Mining Uranium Ore

This demand triggered the first significant mining of uranium ore, not for uranium but for the ore’s tiny traces of radium. The important radium sources were uraninite from the historic Joachimsthal mines in what is now the Czech Republic and the carnotite (hydrous potassium uranium vanadate) ores of western Colorado. By 1912, radium was the most valuable commodity in existence and cost $100,000 per gram, nearly $2.5 million in today’s currency.

Initially, radioactivity could only be detected with photographic plates and fluorescent screens; it could be crudely measured with gold-leaf electroscopes and complex, piezoelectric-quartz devices. Then in 1908, German physicist Hans Geiger (1882-1945) constructed a sealed, thin metal cylinder with a wire extending down its center. After filling the tube with inert gas, he applied a voltage almost high enough to cause a discharge between the electrodes, in this case the wire and the tube walls. When exposed to radioactivity, the gas ionized to become conductive, completing the circuit and producing an audible click. These electrical discharges instantly returned the gas ions to their normal energy level, making it possible to continuously and immediately detect additional radioactivity and measure its intensity by “counting.”

Although the first “Geiger counters” were ponderous instruments sensitive only to alpha particles, they were vital to the early studies of radioactivity. In 1928, Geiger and his colleague Walther Müller designed a new tube. Now known as the Geiger-Müller counter, it is sensitive to all forms of radioactivity and is still used today.

Greater Understanding and More Utilization


The uses, perception, and importance of radioactive minerals changed radically during World War II, when uranium became the source of the fissionable U-235 isotope needed for the first atomic bombs. Following the war, the United States government subsidized the “Great Uranium Rush,” in which improved, lightweight, shoe-box-sized Geiger-Müller counters were the key tools for the thousands of radiometric prospectors who searched for “hot rocks,” mainly uraninite and carnotite.

The radioactivity emitted by uranium and thorium has several effects on minerals, one of which is color alteration. Long-term exposure to low-level radioactivity can disrupt normal electron positions in the crystal lattices of certain minerals. This disruption creates electron traps, called “color centers,” that alter how the mineral absorbs and reflects light. The colors of smoky quartz, blue and purple fluorite and halite, brown topaz, and yellow and brown calcite are often caused by exposure to geophysical radiation.


Metamictization

Another interesting effect is metamictization, which occurs in some minerals that contain accessory amounts of uranium or thorium. In metamictization, geophysical radiation displaces electrons to slowly degrade the host mineral’s crystal structure. Metamictization is usually apparent in crystals as rounded, indistinct edges, curving faces, and decreased hardness and density. Metamictization can sometimes completely degrade crystals into amorphous masses.

Metamictization is common in the rare-earth minerals gadolinite (rare-earth iron beryllium oxysilicate) and monazite (rare-earth phosphate). Because of their similar atomic radii, uranium and thorium often substitute for rare-earth elements, making these minerals radioactive. California’s huge Mountain Pass rare-earth-mineral deposit was actually discovered by a uranium prospector equipped with a Geiger-Müller counter.

Zirconium Silicate

Zircon, or zirconium silicate, another mineral subject to metamictization, has an additional connection to radioactivity: it is employed in radiometric dating, which uses known rates of atomic decay to determine the age of ancient rocks. Because of similar atomic radii, uranium substitutes readily for zirconium in zircon. The uranium-238 isotope has an extremely long half-life of 4.468 billion years. The inert, extremely durable zircon “protects” the traces of uranium, an ideal combination for the radiometric dating of ancient rocks.

When igneous rocks solidify from magma, their contained traces of uranium have not yet begun to decay. By measuring the extent of atomic decay, geophysicists can determine when the sample crystallized. The oldest known rocks are found in Australia. Based on partially decayed traces of uranium-238 contained in tiny zircon crystals, these rocks have been dated at 4.374 billion years, only a few hundred million years after the formation of the Earth itself.
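The arithmetic behind this kind of dating can be sketched as follows. This is a simplified illustration, not the procedure a geochronology laboratory actually follows: it assumes the crystal held no decay-product atoms when it formed and has neither gained nor lost uranium or its decay products since. The function name and the sample ratio of 0.971 are hypothetical.

```python
import math

U238_HALF_LIFE_YEARS = 4.468e9


def age_from_ratio(daughter_per_parent, half_life_years=U238_HALF_LIFE_YEARS):
    """Age in years from the ratio of decay-product atoms to surviving parent atoms.

    Simplifying assumptions: no decay-product atoms were present when the
    crystal formed, and nothing has entered or escaped since then.
    """
    if daughter_per_parent < 0:
        raise ValueError("ratio cannot be negative")
    decay_constant = math.log(2) / half_life_years
    # Every decayed parent atom ends up as one daughter atom, so
    # original parents = surviving parents * (1 + ratio).
    return math.log1p(daughter_per_parent) / decay_constant


# Hypothetical measurement: 0.971 decay-product atoms per surviving U-238 atom.
print(f"{age_from_ratio(0.971) / 1e9:.2f} billion years")
```

A ratio of exactly 1.0 means half of the original uranium has decayed, which corresponds to one half-life. The hypothetical ratio of 0.971 works out to roughly 4.37 billion years, in line with the age quoted for the Australian zircons.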

Detecting Radioactivity

Today, mineral collectors have access to a wide range of radioactivity-sensing instruments, including dosimeters that measure cumulative radiation exposure, miniaturized Geiger-Müller counters and scintillators that quantitatively measure geophysical radioactivity, and radiation monitors that measure relative overall radioactivity. Prices for basic instruments begin at about $40, while top-of-the-line, quantitative instruments can cost thousands of dollars.

Choosing the Radiation Monitor For You

For general mineral-collecting and amateur radiometric-prospecting uses, radiation monitors, which cost from $200 to $700, will suffice. I’m familiar with the Radalert™ radiation monitor manufactured by International Medcom of Sebastopol, California. It weighs 10 ounces and contains a miniaturized Geiger-Müller tube. Alpha and beta particles, gamma rays, and X-rays ionize the tube’s gas atoms, causing the tube to discharge with tiny electrical pulses. Integrated circuits convert these pulses into readings on a liquid-crystal display, flashes of a light-emitting diode, and audible clicks.

This instrument detects total ionizing radiation (a mix of geophysical, cosmic, and man-made radiation) and provides relative, rather than absolute or quantitative, radioactivity measurements. It is ready for use after quickly determining the local background radiation “load,” which varies with geology, solar-flare activity, and elevation.

At sea level, the normal background radiation might be roughly 13 counts per minute. But at a mountain elevation of 7,000 feet where there is less atmospheric shielding of cosmic radiation, the background level might be 30 counts per minute. Radiation monitors can even detect temporarily elevated levels of cosmic radiation due to increased sunspot activity.
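Because a monitor of this kind gives relative readings, a specimen reading only means something when compared with the local background. The sketch below shows one simple way to make that comparison. It is an illustration, not a feature of any particular instrument: the function names are hypothetical, the three-sigma threshold is a common statistical convention assumed here, and both counts are assumed to have been taken over the same length of time.

```python
import math


def net_count_rate(sample_counts, background_counts, minutes=1.0):
    """Return (net counts per minute, approximate uncertainty).

    Assumes the sample and background counts were each collected over
    the same number of minutes.
    """
    if minutes <= 0:
        raise ValueError("minutes must be positive")
    net = (sample_counts - background_counts) / minutes
    # Radioactive counts follow Poisson statistics, so the variance of
    # each total is roughly the total itself.
    uncertainty = math.sqrt(sample_counts + background_counts) / minutes
    return net, uncertainty


def is_elevated(sample_counts, background_counts, minutes=1.0, sigmas=3.0):
    """True if the reading stands clearly above background (assumed 3-sigma rule)."""
    net, uncertainty = net_count_rate(sample_counts, background_counts, minutes)
    return uncertainty > 0 and net > sigmas * uncertainty


# Hypothetical one-minute readings at 7,000 feet, where background is about 30.
print(is_elevated(36, 30))    # a small bump that could easily be chance
print(is_elevated(450, 30))   # far above background
```

Counting for several minutes instead of one narrows the uncertainty, which helps when a specimen is only slightly more active than its surroundings.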

Background Radiation

Background radiation also varies with local geology. Radiation levels near granite outcrops are usually higher than in other areas because of traces of uranium within the granite. Radiation monitors can serve as a safety tool to detect elevated levels of radioactivity from potentially hazardous accumulations of radon gas in living spaces. They can also detect the very low levels of alpha radiation emitted by household smoke detectors.

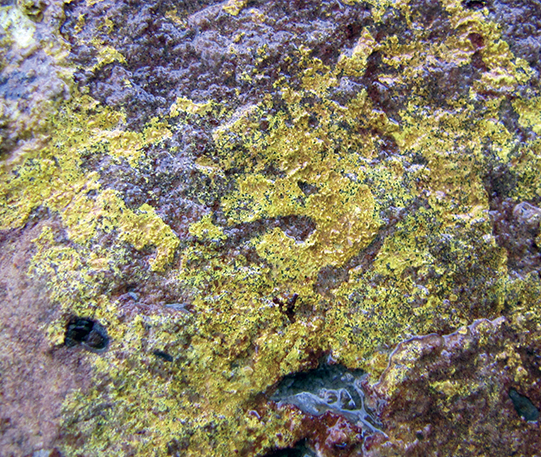


Smoky quartz sometimes has detectable traces of radioactivity, while gadolinite, monazite, and other rare-earth minerals have levels that are easily detectable. When used with such uranium-bearing minerals as canary-yellow carnotite and tyuyamunite, yellowish-green-to-green autunite, and green torbernite, radiation monitors “sound off” with hundreds or thousands of counts per minute.

Among the interesting radioactive collectibles is yellow “uranium glass,” which was popular in the early 1900s and still emits detectable levels of radioactivity. Another is greenish trinitite, quartz sand that was fused together by the world’s first atomic detonation on July 16, 1945, at New Mexico’s Trinity Site. Trinitite specimens, which are still sold today, have low but easily detectable levels of radioactivity.

Proper Handling

Collecting radioactive minerals is not dangerous when precautions are followed. One rule is to collect small specimens. Cumulative radiation and the amount of radon gas emitted by radioactive specimens are directly proportional to specimen size. There is no need to collect cabinet-sized specimens of carnotite, even though they are easily found on mine dumps.

Handle radioactive specimens minimally and always wash hands thoroughly afterward. Never eat, drink, sleep, or smoke around radioactive specimens, and always keep them out of the reach of children. Also, radioactive specimens should be clearly labeled as such and stored in well-ventilated spaces away from living areas.


Radiation monitors can add a new dimension to many field-collecting trips. And they are an absolute necessity when exploring the thousands of uranium mine dumps scattered across the Four Corners region of Colorado, Utah, and New Mexico. Radiation monitors make the difference between finding nice specimens of brightly colored, oxidized uranium minerals and finding nothing at all.

Collectors should never enter any abandoned mine, and abandoned uranium mines are particularly hazardous. These unventilated mines have accumulated extremely high concentrations of intensely radioactive radon gas.

Anyone interested in the history of radioactivity will enjoy visiting two New Mexico museums: the Bradbury Science Museum at Los Alamos National Laboratory in Los Alamos and the National Museum of Nuclear Science & History in Albuquerque. Both contain a wealth of exhibits and information on radioactivity, one of the fascinating physical properties of minerals.

This story about radioactive rocks previously appeared in Rock & Gem magazine. Story & photos by Steve Voynick unless otherwise indicated.